HPC workloads that require NFS are increasingly common in Microsoft Azure, but choosing the right solution for them can be complex. Throughput, latency, reliability, and cost all need to be weighed to get the best performance and value. This post gives an overview of the NFS solutions available in Azure, along with best practices for implementing and optimizing them for your specific workload requirements.

NFS Solutions in Azure

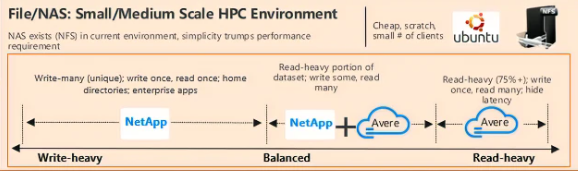

There are a few different solutions available for HPC workloads requiring NFS in Microsoft Azure. One of the most popular options is Azure NetApp Files (referred to as NetApp in this post), a managed file storage service that lets organizations create and manage NFS volumes without running their own storage servers. NetApp is optimized for Write-Many (Unique) workloads, where many unique files are each written once and read once.

Another option for NFS workloads is Avere, which Microsoft acquired in 2018. Avere vFXT for Azure is a high-performance caching layer designed to give HPC workloads fast access to data, and it can be used in conjunction with NetApp to support balanced read/write workloads. For workloads that are primarily read-heavy, Avere can be a good fit, since frequently read data is served from the cache.

For some low-throughput workloads, it may be more cost-effective to self-host an NFS server using Linux or other technologies instead of using commercial solutions like NetApp or Avere. This can be a suitable solution for simple or small-scale workloads that don’t require the advanced features or high-performance capabilities of commercial NFS solutions.

Evaluating Workload Requirements

When evaluating HPC workloads that require NFS, there are several factors that need to be considered to determine the best solution:

- Throughput: The amount of data that needs to be read or written per second is one of the most important factors to consider when selecting an NFS solution. Higher throughput requirements often require more advanced and expensive solutions.

- Latency: Some HPC workloads need extremely low latency to reach their target performance. In these cases, choose a solution with low-latency storage and networking.

- Reliability: Data integrity is critical for HPC workloads that require NFS. Solutions with features like data replication and backup are essential to ensure the reliability and recoverability of data in the event of a failure.

- Cost: Commercial solutions like NetApp and Avere can be expensive, particularly for high-performance workloads. Self-hosting an NFS server using Linux or other technologies can be a more cost-effective option for low-throughput workloads, but may require more maintenance and support.

Based on these requirements, different scenarios and solutions can be identified. For example, an HPC workload requiring extremely high throughput and low latency may require a combination of NetApp and Avere. On the other hand, a lower-throughput workload with less stringent latency requirements may be well-suited to a self-hosted NFS solution using Linux.

It’s important to carefully evaluate the specific requirements of each workload to determine the best solution or combination of solutions to meet those needs.
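As a rough illustration of how the guidance above could be turned into a first-pass triage, here is a minimal Python sketch. The `Workload` class, the `suggest_solution` function, and both threshold constants are hypothetical names and values invented for this example. They are not Azure limits or recommendations, and any real decision should be based on benchmarks of your own workload.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    throughput_mbps: float   # sustained throughput the workload needs, in MB/s
    read_fraction: float     # share of I/O that is reads, from 0.0 to 1.0
    latency_sensitive: bool  # True if the workload needs very low latency


# Hypothetical cut-offs for illustration only; calibrate against real measurements.
LOW_THROUGHPUT_MBPS = 200.0
READ_HEAVY_FRACTION = 0.8
WRITE_HEAVY_FRACTION = 0.2


def suggest_solution(w: Workload) -> str:
    """Map workload characteristics to the options discussed in this post."""
    # Small, latency-tolerant workloads: a self-hosted server may be cheapest.
    if w.throughput_mbps < LOW_THROUGHPUT_MBPS and not w.latency_sensitive:
        return "self-hosted Linux NFS server"
    # Mostly reads: a caching layer in front of storage helps the most.
    if w.read_fraction >= READ_HEAVY_FRACTION:
        return "Avere"
    # Mostly unique writes: go straight to the storage service.
    if w.read_fraction <= WRITE_HEAVY_FRACTION:
        return "NetApp"
    # Balanced read/write at high throughput: combine the two.
    return "NetApp + Avere"


# Example: a small, latency-tolerant workload.
print(suggest_solution(Workload(throughput_mbps=50.0, read_fraction=0.5,
                                latency_sensitive=False)))
```

A helper like this is only a starting point for discussion. Cost and reliability, the other two factors listed above, are deliberately left out to keep the sketch short.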

Best Practices for Implementing NFS in Azure

Once you’ve determined the best NFS solution for your HPC workload in Microsoft Azure, there are several best practices to keep in mind when implementing and optimizing your solution:

- Optimize networking: Network throughput is a critical factor in NFS performance. If clients or data sources sit on premises, consider using Azure ExpressRoute or a VPN to ensure fast and reliable connectivity to your NFS solution.

- Optimize storage configuration: Ensure that the storage configuration matches the specific NFS workload requirements. For a self-hosted server, for example, consider using high-performance disks like Azure Ultra Disk or Premium SSD, or striping across more disks in a RAID configuration.

- Secure your NFS solution: Security is a top priority for any NFS solution. Consider using Microsoft Defender for Cloud (formerly Azure Security Center) to monitor and protect your NFS infrastructure against security threats.

- Monitor performance: Regularly monitor NFS performance using tools like Azure Monitor or other monitoring solutions to identify and resolve performance issues before they impact workload performance.

- Troubleshoot performance issues: When performance issues do arise, have troubleshooting procedures in place so you can identify and resolve them quickly. Collect and analyze system logs and performance metrics to diagnose the root cause.
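To make the monitoring and troubleshooting points above more concrete, the following Python sketch measures sequential write throughput to a directory, which could be an NFS mount point. The function name `measure_write_throughput`, its parameters, and the `nfs_probe_` file prefix are assumptions made for this example. It is a simple spot check under those assumptions, not a replacement for Azure Monitor or a dedicated benchmarking tool.

```python
import os
import tempfile
import time


def measure_write_throughput(directory: str, size_mb: int = 64,
                             block_kb: int = 1024) -> float:
    """Write a temporary file into `directory` and return the rate in MB/s."""
    block = os.urandom(block_kb * 1024)      # one block of random data
    blocks = (size_mb * 1024) // block_kb    # number of blocks to write
    fd, path = tempfile.mkstemp(dir=directory, prefix="nfs_probe_")
    try:
        start = time.perf_counter()
        with os.fdopen(fd, "wb") as f:
            for _ in range(blocks):
                f.write(block)
            f.flush()
            os.fsync(f.fileno())             # ask the OS to push data to storage
        elapsed = time.perf_counter() - start
    finally:
        os.remove(path)                      # always clean up the probe file
    written_mb = blocks * block_kb / 1024
    return written_mb / elapsed


# Example call (the mount path is hypothetical):
# rate = measure_write_throughput("/mnt/nfs", size_mb=256)
# print(f"{rate:.1f} MB/s")
```

Running the same probe at regular intervals and logging the result gives a baseline to compare against when users report slowdowns. A single sequential write from one client says little about parallel or small-file performance, so treat the number as an indicator only.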

By following these best practices, you can ensure that your NFS solution in Azure is optimized for performance, reliability, and security.

Choosing the right solution for HPC workloads requiring NFS in Microsoft Azure can be a complex process. Whether you choose a commercial solution like NetApp or Avere or decide to self-host your own NFS server using Linux or other technologies, start from the throughput, latency, reliability, and cost requirements of your specific workload, then apply the best practices outlined in this post to get the most value from the solution you pick.

Here are some links to documentation that might help you get started:

- Azure NetApp Files documentation: https://docs.microsoft.com/en-us/azure/azure-netapp-files/

- Avere vFXT for Azure documentation: https://docs.microsoft.com/en-us/azure/avere-vfxt/

- Linux documentation for NFS: https://wiki.linux-nfs.org/wiki/index.php/Main_Page